Today, we sit down with one of Meilisearch’s Rust developers, Many, to talk about the work he’s been doing with Charabia to improve Meilisearch’s language support.

A bit of historical context

Though Many’s first contact with Meilisearch’s language parsing started two years ago, he only began focussing on tokenization around the end of 2021. You might be asking yourself: what is tokenization? Well, Many tells us it is one of the most important steps when indexing documents and, in short, means breaking down search terms into units our engine can process more efficiently. The part of Meilisearch’s engine that takes care of tokenization is called a tokenizer.

Many felt the way we handled content in different languages wasn’t efficient and that, even if Meilisearch already worked very well with languages like English and French, the same could not be said about other language groups. Many explains that a lot of it comes down to the different ways we type in different languages, as well as the various ways in which we make mistakes when writing in them. A typo in Japanese or Chinese, for example, might follow a different logic than a typo in Italian or Portuguese.

A different perspective

One main issue was immediately clear to Many: it would be impossible for us at Meilisearch to master all the languages in the world and improve our tokenizer alone. Luckily, the open-source community is a diverse and generous bunch, with people from literally all over the world fluent in more languages than we can possibly name. So Many’s focus shifted from directly improving language support to making contributing as simple and painless as possible.

Two main aspects go into improving a language with our tokenizer. First, we can work on segmentation, which means understanding where a word starts and where it ends.

“It appears pretty obvious for English or European speakers: ‘split on white spaces and you get your words!’ But it becomes harder when you face other types of languages like Chinese, where there is no explicit separator between characters.”
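To make the quote concrete, here is a minimal sketch of a whitespace-only segmenter written with Rust's standard library. The function name `naive_segment` is hypothetical and this is not how Charabia segments text; it only illustrates why splitting on white space works for English but returns a whole Chinese sentence as a single token.

```rust
/// Naive segmenter: splits on Unicode whitespace only.
/// Illustration only; `naive_segment` is a hypothetical name,
/// not part of Charabia or Meilisearch.
fn naive_segment(text: &str) -> Vec<&str> {
    text.split_whitespace().collect()
}

fn main() {
    // English: whitespace marks the word boundaries.
    let english = naive_segment("the quick brown fox");
    println!("{:?}", english); // ["the", "quick", "brown", "fox"]

    // Chinese: no spaces between words, so the whole sentence
    // comes back as one token.
    let chinese = naive_segment("我喜欢搜索引擎");
    println!("{} token(s): {:?}", chinese.len(), chinese); // 1 token(s): ["我喜欢搜索引擎"]
}
```

A language-aware segmenter would need a dictionary or a statistical model to find the word boundaries inside that single Chinese chunk, which is where contributions from fluent speakers become valuable.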

Second, we can work on normalization, which encompasses canonical modifications (upper or lower case), compatibility equivalence (recognising the same character under different forms, e.g., ツ and ッ), and transliteration (transposing from one alphabet to another, e.g., Cyrillic into Latin). We avoid transliteration as much as possible because it often results in information loss.

“Here it’s the same: each language has its specificities, and we have to adapt the normalization for each language. Take the case of capitalization: we have two versions of each Latin character, a capitalized version and a lowercased version, but this specificity doesn’t exist for all languages in the world!”
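The capitalization point can be sketched with Rust's standard library alone. The helper names `normalize_case` and `has_case` are hypothetical and chosen for illustration; they are not Charabia's API. The sketch shows that lowercasing changes Latin text but leaves Japanese katakana untouched, because those characters have no case at all.

```rust
/// Case normalization: lowercases the token.
/// Hypothetical helper for illustration, not Charabia's API.
fn normalize_case(token: &str) -> String {
    token.to_lowercase()
}

/// Returns true if the character has an upper or lower case form.
fn has_case(c: char) -> bool {
    c.is_uppercase() || c.is_lowercase()
}

fn main() {
    // Latin script: two versions of each letter, so lowercasing matters.
    println!("{}", normalize_case("Meilisearch")); // meilisearch
    println!("{}", has_case('M')); // true

    // Katakana: no notion of case, so lowercasing is a no-op.
    println!("{}", normalize_case("ダメ")); // ダメ
    println!("{}", has_case('ダ')); // false
}
```

For scripts like this one, a normalizer has to look at other language-specific equivalences instead of case.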

Many’s job, then, is less about writing a lot of code, and more about researching and understanding the ins and outs of a particular language so he can judge whether a contribution is going to work well with Meilisearch.

He explains:

“This all boils down to the contrast between traditional translation and a field called information retrieval (IR). Whereas translation focuses on preserving meaning, IR can afford to be less strict and account for mistakes such as misspelled words. Focussing on IR over translation allows us to offer search options that might be irrelevant in translation work.”

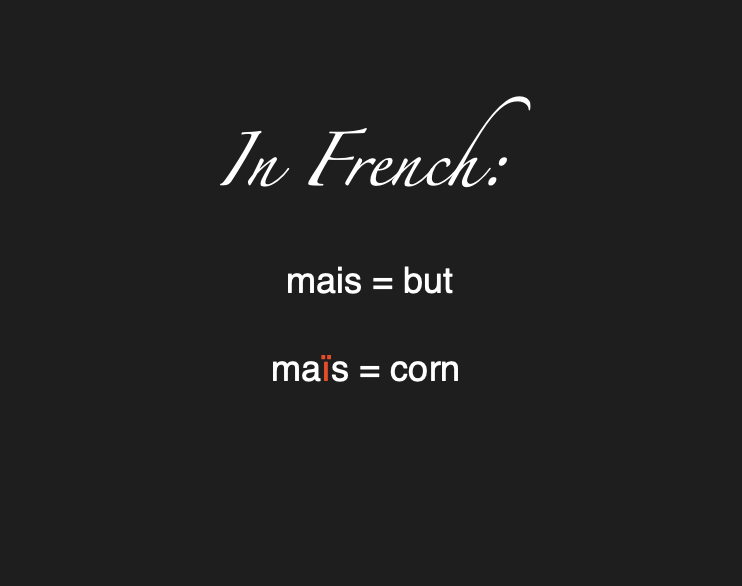

In French, “mais” means “but” and “maïs” means “corn.” If a user types “mais,” they see results for both words, because Meilisearch takes into account that the user might have forgotten, or simply did not feel like typing, the appropriate diacritic.

So, whenever we wish to improve support for a language, it’s not only about having the right language experts contributing to our project. It also involves an enormous investment of research time on Many’s side, so he can determine whether a change to the tokenization process of a specific language is going to return more relevant search results.

What languages have we worked on so far?

As you might have read in our blog post about the v0.29 release, in the past two months we’ve done extensive work on improving our support for Thai. This is a feature we’re very proud of and which was only possible thanks to the open-source community. There’s a lot of room for improvement, though: Thai segmentation in Meilisearch is great, but normalization still requires work.

We also recently improved Meilisearch’s Hebrew normalization. Before that, we made good advances on Japanese segmentation, but we want to polish Japanese normalization to better account for things like the differences between ツ and ッ, or ダメ and だめ.

Right now, Many is very interested in tokenization for Chinese, a language that comes with its own set of challenges. For example, Chinese comes with a number of variants depending on where it’s spoken: Mandarin, mainland China’s various regional uses, and Cantonese, to name just a few. Though all these variations share mostly the same characters and meanings, they are not always used in the same way, can correspond to different sounds, and thus aren’t typed by everyone in the same fashion, all of which makes IR really tricky. At this point, we’re unable to have competing normalizations, which led us to base normalization on the dialect with the largest number of users.

Future ambitions

Accommodating competing normalizations for the same language (the tolerance of multiple variants of a language) is one of Many’s top priorities right now.

He also feels the number of languages we support is too low, so an important step is devising strategies to get more contributors involved. You might ask: which languages does Many want to focus on next? He tells us it’s a complex choice: he needs to balance his own personal preferences, the languages our contributors bring forward, and a strategic choice based on the number of speakers of a language.

Not all languages are equal, he explains. Some are easier to handle: agglutinative languages like Turkish, for example, could be next! “However, if a contributor would like to push forward their language, we are happy to consider their suggestion and reprioritize,” assures Many.

Next on the list: parsing accents, diacritics, and other non-spacing marks. Remember our “maïs” and “mais” example earlier? At this point, the tokenizer simply removes accents, but some users may want to take these diacritics into account. Accomplishing this isn’t technically very complex, according to Many: in short, it requires establishing two distinct normalization processes, one loose (which overlooks accents) and one strict (which doesn’t ignore anything). The challenge is integrating them without slowing down the search terribly or doubling the size of the index.
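The loose and strict processes can be sketched as two small functions. This is an illustration under stated assumptions, not Charabia's implementation: the names `normalize_strict` and `normalize_loose` are hypothetical, the accent table is a tiny hand-written sample covering a few French letters, and the input is assumed to use precomposed characters (a real normalizer would more likely rely on Unicode decomposition).

```rust
/// Strict normalization: lowercases but keeps every diacritic.
/// Hypothetical name, for illustration only.
fn normalize_strict(token: &str) -> String {
    token.to_lowercase()
}

/// Loose normalization: lowercases, then folds a few accented letters
/// to their base letter. The table is a small hand-written sample and
/// assumes precomposed characters; it is not a complete solution.
fn normalize_loose(token: &str) -> String {
    token
        .to_lowercase()
        .chars()
        .map(|c| match c {
            'à' | 'â' | 'ä' => 'a',
            'é' | 'è' | 'ê' | 'ë' => 'e',
            'î' | 'ï' => 'i',
            'ô' | 'ö' => 'o',
            'ù' | 'û' | 'ü' => 'u',
            'ç' => 'c',
            other => other,
        })
        .collect()
}

fn main() {
    // Loose: "maïs" and "mais" collapse to the same key,
    // so a query for one finds both.
    println!("{}", normalize_loose("maïs") == normalize_loose("mais")); // true

    // Strict: the two words stay distinct.
    println!("{}", normalize_strict("maïs") == normalize_strict("mais")); // false
}
```

Storing both keys for every token is the naive way to support the two modes, and it is exactly what would risk doubling the size of the index.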

How can I contribute?

Want to help? You can contribute in various ways, many of which have little to do with coding:

- Upvote a discussion: this helps Many prioritize a specific topic or language

- Create an issue on GitHub explaining language-related problems you experience: for example, if you notice Meilisearch is returning erroneous results when you search for a specific word or expression

- Know a language like the back of your hand? We’d love to hear your thoughts on challenges and possible solutions when creating segmenters and normalizers

- Propose tokenization libraries: it’s very helpful to know about existing tools you like and think work very well for a given language

- Submit a PR solving a problem you experienced when using Meilisearch with a specific language. Tip: clearly explaining the problem and the solution in the PR itself or in a GitHub issue definitely increases the chances of getting your contribution accepted

If you’re participating in Hacktoberfest right now, most of the issues listed on Charabia’s repository are eligible for the event! PRs without a corresponding issue are also welcome, and we’ll be happy to tag yours as “hacktoberfest approved” if it is accepted.

Last but not least: our work wouldn’t be possible without the fantastic input we receive from the community. Thank you so much for all your effort and generosity!